Glibc PTMalloc Internals

The GNU C Library is a complex library, and it’s default memory allocator PTMalloc (Posix Thread aware malloc) is no exception to this rule. Within this blog post, I will attempt to document the internals of this allocator, as well as it’s evolution throughout the years.

This post is primarily an in depth analysis and a collection of notes which I have amassed throughout the years of being bombarded with heapnote challenges. I am creating this post partially for my sake as its become very difficult to keep track of the differences between each version of Glibc without some form of reference.

I will not be covering any exploitation techniques within this post, as it only serves as a means of gaining a good understanding of the allocator. There will be more posts in the future which detail the various exploitation techniques which target the memory allocator.

If I have incorrectly stated anything, please feel free to inform my of my error via one of my contacts below.

We will first take a high level overview into the allocation algorithm as well as various optimizations and protections which have been implemented over the years.

Overview

Lets first begin with a general definition of what the heap is and why it exists.

The heap, not to be confused with the heap data structure is a means of efficiently allocating dynamic memory to a process. This means that during runtime, a process can request a chunk of designated memory to be allocated and returned. When the process no longer needs this chunk of memory, it can be freed, which deallocates the chunk and allows for it’s reuse.

There are a couple means of allocating memory, one such technique is to rely on the process stack to allocate local variables. This can be used, and is typically more efficient as opposed to relying on a dynamic alloctor; but the downside is the fact that it cannot dynamically change the size of the allocation at runtime. The local memory which resides on the call stack is also deallocated when the function returns and the previous stack frame is restored. This means that the memory is not global and cannot be accessed outside the bounds of the current function (besides nested functions).

Another form of memory allocation is that of mmap, which directly maps entire pages of memory for the process to use. The downside to this is the fact that we cannot efficiently allocate chunks of memory less than a page in size, which would be very inefficient in both time and space.

This is where the concept of dynamic memory management comes in, and how we can use memory allocators as an efficient means of allocating smaller dynamic and global chunks of memory efficiently.

Heap

So how does the heap actually work within Glibc? We would think that the process may initially utilize the mmap system call to map a large region of memory which will be designated for our program heap but thats not the case.

The region of memory which is designated for our process heap is actually allocated via the brk and sbrk system calls. These two system calls change the location of the program break, which serves to define the end of the process’s data segment. By increasing the program break, we essentially allocate memory, while decreasing the break will deallocate.

If ASLR is enabled on the system, the data segment within the process will be randomly placed within the virtual address space. This allows for the efficient randomization of the heap’s base address.

I can’t think of any other reason as to why the brk/sbrk system called are utilized as opposed to mmap or some other alternative. Reading through the source of Glibc only provides the statement that “By default, rely on sbrk”.

Something to keep in mind is the fact that malloc will actually utilize mmap if the size of our allocation is too large.

Chunk

We can refer to the term chunk as a unit of memory per allocation which is returned by the allocator. For example, when we call malloc, we are returned the address of the chunk designated for us by the allocator.

Chunks within the PTMalloc allocator utilize metadata within their headers as a means of storing important information. The following is the definition of the malloc_chunk structure within Glibc.

Something important to note is the fact that all allocations by PTMalloc are multiples of 8. Allocation requests which is not a multiple of 8 is rounded to the next largest multiple. The chunk size you request will be either larger than or equal to the size you request from the allocator.

struct malloc_chunk {

INTERNAL_SIZE_T mchunk_prev_size; /* Size of previous chunk (if free). */

INTERNAL_SIZE_T mchunk_size; /* Size in bytes, including overhead. */

struct malloc_chunk* fd; /* double links -- used only if free. */

struct malloc_chunk* bk;

/* Only used for large blocks: pointer to next larger size. */

struct malloc_chunk* fd_nextsize; /* double links -- used only if free. */

struct malloc_chunk* bk_nextsize;

};

When we allocate a chunk of memory from our allocator, it will only return to us the pointer to the usable region of the chunk. The header of the structure shown above our usable region should not be accessed or modified by the user, but this doesnt necessarily mean that we can’t.

Looking at the structure of malloc_chunk above, the usable region of memory begins at the fd pointer. We can see that the fields mchunk_prev_size, fd, bk, fd_nextsize and bk_nextsize are used ONLY if free. These pointers serve to establish a doubly linked list of free chunks which are cached within something known as bins. We will take a deeper look into these caching bins later on within this post.

This means that within each of our currently allocated chunks, the only field which is in use will be the mchunk_size field, which holds the size of the chunk, as well as various flags.

Before we elaborate on these flags, lets first take a look at an ascii diagram to gain a better understanding of the chunk layout within memory.

chunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of previous chunk, if unallocated (P clear) |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-

| Size of chunk, in bytes |A|M|P|

mem-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| User data starts here... .

. .

. (malloc_usable_size() bytes) .

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+--+

We can see that the three bits within the diagram named A, M and P reside within the mchunk_size field of the header. These flags are stored on the three lower order bits of the mchunk_size field.

Our first flag P (PREV_INUSE) is used for indicating whether or not the previous adjacent chunk is allocated. When a chunk is freed, malloc will rely on the PREV_INUSE flag of the following chunk to determine whether it should coalesce the newly freed chunk with the previous or next chunks.

The next flag M (IS_MMAPPED) is fairly simple, as it only serves to indicate the method in which the chunk was allocated. If the chunk was too large to be allocated by the default implementation of malloc, then mmap will be utilized to map the memory required. This allows the allocator to keep track of the chunks method of allocation, and its subsequent deallocation.

Then our last flag A (NON_MAIN_ARENA). This flag serves to indicate whether or not the chunk belongs to the main heap or not. By default PTMalloc will utilize a single global heap state for our allocator named the arena. However, when we are within a multithreaded environment the allocator must effectively manage not only the state of the main heap, but that of the other threads as well. I will elaborate on the arena further on as well.

Lets now take a minute to visualize the layout of a chunk within memory. I am using pwndbg. The following is a super quick demo script which simply allocates a single chunk and exits.

#include <stdlib.h>

void main(void) {

malloc(24);

return;

}

Lets attach this to our debugger and see the state of the heap.

pwndbg> heap

Allocated chunk | PREV_INUSE

Addr: 0x555555559000

Size: 0x291

Allocated chunk | PREV_INUSE

Addr: 0x555555559290

Size: 0x21

Top chunk | PREV_INUSE

Addr: 0x5555555592b0

Size: 0x20d51

pwndbg> vis_heap_chunks

-- snip --

0x555555559280 0x0000000000000000 0x0000000000000000

0x555555559290 0x0000000000000000 0x0000000000000021 <-- our chunk

0x5555555592a0 0x0000000000000000 0x0000000000000000

0x5555555592b0 0x0000000000000000 0x0000000000020d51 <-- top chunk

pwndbg> p *(struct malloc_chunk *)0x555555559290

$1 = {

mchunk_prev_size = 0,

mchunk_size = 33,

fd = 0x0,

bk = 0x0,

fd_nextsize = 0x0,

bk_nextsize = 0x20d51

}

pwndbg>

As our chunk is still currently allocated, we can see that the field bk_nextsize is incorrectly populated with the size of the top chunk / wilderness. In our case, the only field which is being used by our allocated chunk is mchunk_size. We can also notice that we have a chunk of size 32 in total and a usable region of 24. The size is shown as 33 due to the PREV_INUSE flag being set in the lowermost bit of the mchunk_size field.

Top Chunk / Wilderness

The top chunk (also referred to as the wilderness), is the chunk which borders the end of available memory on heap. We can also think of this chunk as a region of free memory which has not yet been allocated.

This chunk is given special treatment, as it will never be inserted into any cache bins and is only used as a last resort when other caching mechanisms cannot provide an adequate chunk.

If more memory is required on the heap, then the chunk will extend via the sbrk system call. We can think of the top chunk as the amount of memory left on the heap. The metadata on this top chunk will contain the size of the chunk, as well as the PREV_INUSE flag which is always set.

Last Remainder Chunk

Under certain conditions, when the user requests a chunk in which the cache bins cannot satisfy, a larger free chunk which has been cached can be split and returned to the user.

The remainder of the chunk which has been split is known as the last remainder chunk, and will be placed within the chunk cache again. I will elaborate on this process at a later point in time when explaining the cache bins.

Cache Bins

Let’s now take a closer look into the previously mentioned caching bins.

These bins serve as a means of optimizing allocation speeds and reducing fragmentation on the heap by allowing the quick retrieval of chunks which were previously in use. There are in total 5 different kinds of bins which all serve separate purposes, which we will discuss.

But why would we want to store recently freed chunks within these bins? These bins serve as a means of improving performance and reducing heap fragmentation. By caching the pointers to these freed chunks of memory, the allocator has the ability to quickly check the bins for cached chunks of the same size.

There are various kinds of bins within the current implementation of PTMalloc. Let’s take a look at their use cases, as well as various properties which make them unique.

Tcache

First, we have the tcache (Thread Local Cache), which was introduced within Glibc 2.26. In terms of functionality, the tcache is very similar to the fastbin in the sense that it serves as a set of singly linked LIFO lists which are stored in size specific bins. Unlike the fastbin, the tcache has a specific limit on the quantity of chunks within each bin as it only allows (by default) 7 free chunks per bin.

However, as the name implies, the tcache serves as a thread specific caching mechanism, meaning that there is a tcache per thread.

The following are relevant definitions for the tcache.

typedef struct tcache_entry

{

struct tcache_entry *next;

} tcache_entry;

typedef struct tcache_perthread_struct {

// since glibc 2.30 counts type is uint16_t, used to be unsigned char

uint16_t counts[TCACHE_MAX_BINS];

tcache_entry *entries[TCACHE_MAX_BINS];

} tcache_perthread_struct;

static __thread tcache_perthread_struct *tcache = NULL;

The purpose of this bin is to provide a faster means of accessing cached chunks within multithreaded environments, as there is no longer a need to lock the global arena state when accessing these thread local caches. Something important to note is the fact that the tcache takes priority over all the other bins, it will be the first bin to be accessed in the event of an allocation, and the first candidate to cache a chunk if it is of size for the tcache.

We can see the structure named tcache_perthread_struct, which serves as the global state which stores important information on each threads tcache. Each thread will have a tcache_perthread_struct, hence the name.

There are 64 tcache bins in total with each bin being a maximum of 7 entries in length. Each of the tcache bins store a fixed size chunk determined by the index of the bin. The tcache is capable of containing free chunks between the sizes of 16 to 520 and 32 to 1032 on 32 and 64 bit systems respectively.

Fastbin

The fastbin is a set of singly linked LIFO lists which are stored within size specific bins. There are 10 fastbins and each bin will only contain entries of the same size. The size of the bin is determined by it’s index, ranging from 16 to 88 and 32 to 176 on 32 and 64 bit systems respectively.

As each entry within the fastbin are all the same size, there is no need to access from the middle of the list, so each entry only utilizes its fd pointer (which points to the next entry within the fastbin). An fd pointer of NULL indicates the end of the singly linked list.

Chunks held within the fastbin are treated as allocated chunks from the point of view of the allocator. The chunks held within the fastbin all keep the PREV_INUSE flag set which prevents them from being consolidated with other free chunks. This is done at the cost of additional fragmentation, but the result is that of minimal logic which causes free to be faster.

As a result of this desire for performance, when freeing and caching chunks into the fast bin, the allocator will only check for a double free on the top chunk, as opposed to traversing the entire singly linked list for duplicates. This has various security implications which allow attackers to abuse this bin to gain arbitrary write primitives.

To explicitly consolidate the fastbin, we must use a specialized version of free called malloc_consolidate, which releases all free chunks within the fastbin and coalesces them with other adjacent freed chunks.

The function malloc_consolidate is only called under two scenarios. The first is when a requested allocation of a chunk is larger than FASTBIN_CONSOLIDATION_THRESHOLD. This macro is defined as the following.

#define FASTBIN_CONSOLIDATION_THRESHOLD (65536UL)

The next scenario in which malloc_consolidate is called is when an allocation request is too large to be satisfied by the fastbin and smallbin. In this case the allocator will attempt to consolidate the fastbin with adjacent free chunks in order to satisfy the request.

Small Bin

There are 62 small bins, where each are cyclic, doubly linked list with sizes ranging from 16 to 504 and 32 to 1008 on 32 and 64 bit systems respectively. The size of chunk each bin contains depends on the index of the bin, like the fastbin and tcache. The smallbin follows a FIFO order of operation, which means that entry insertions occur at the head of the doubly linked list, and removals take place at the tail. Each small bin only contains entries of the same size.

The small bin belongs to a bin known as the normal bin. The normal bin is split into two kinds of bins, the small and large bin.

As we might have noticed, the size of freed chunks that the small bin contains also overlaps with that of the fastbin and tcache. This is primarily due to the fact that no freed chunks can directly be placed within the fastbin, it must first go through the unsorted bin.

Large Bin

The large bin (like the small bin), is also referred to as a normal bin.

There are 63 large bins which each maintain a doubly linked list with operations being performed in a FIFO manner similar to that of the small bin. However, unlike the small bin, the large bin as the ability to contain chunks of different sizes. The sizes within the bins must be within a specific range however.

The large bins are divided into six groups, each with a separate range of free chunks which they contain. The sizes in which the large bins contain are approximately logarithmically spaced. What follows is a table which lists the size ranges of each group of large bin, as well as the quantity of bins that belong to each group.

| Group | Quantity of Bins | Spacing |

|---|---|---|

| 1 | 32 bins | 64 bytes |

| 2 | 16 bins | 512 bytes |

| 3 | 8 bins | 4096 bytes |

| 4 | 4 bins | 32768 bytes |

| 5 | 2 bins | 262144 bytes |

| 6 | 1 bin | what’s left / infinite |

| total: 63 large bins |

So the range in which the large bin is capable of holding in total is between sizes 512 bytes to 128 kilobytes, which is when the memory allocator uses mmap to directly map chunks.

The previously discussed fd_nextsize and bk_nextsize pointers within the chunk are utilized within the large bin. They provide pointers to the next chunk which is within the large bin.

Unsorted Bin

The unsorted bin is also a circular, doubly linked list which holds free chunks of any size. There is only one unsorted bin and acts as a sort of queue for the small and large bins. The only free chunks which make it into the normal bins are those that come from the unsorted bin. The unsorted bin is also referred to as a normal bin as it is held within the same buffer as the large and small bins within the heap state.

When chunks that are freed are too large or the tcache or fastbin, they get placed within the unsorted bin. This bin will hold the chunk until the next allocation request from malloc, which will check to see if the size of the chunk within the bin is a perfect match. This functionality provides the allocator one chance to use the chunk before it is sorted into the small and large bins.

If the allocation size is less than the chunk present within the unsorted bin, then the allocator will break off that portion of the chunk and return it to the user. The remainder will be placed back within the unsorted bin to undergo the same process. If the allocation demand is larger than the chunk size within the unsorted bin however, then the chunk will then be moved into either the small or large bin depending on its size. A chunk will be placed within the small bin if it is less than 512 bytes, else the unsorted bin will place it within the large bin.

Let’s take a look at the following code as an example to help better visualize the process which the unsorted bin undergoes.

#include <stdlib.h>

int main(int argc, char** argv) {

void* chunk = malloc(1040); // allocate our chunk large than fastbin & tcache size

void* _ = malloc(24); // prevent consolidation with top chunk

free(chunk); // place within unsorted bin

return 0;

}

Now let’s debug this and check out the layout of the heap, as well as where the chunk we allocated has ended up. If everything went smoothly, then the chunk should have been placed within the unsorted bin.

Let’s place a breakpoint on _exit and check out the state of the heap.

Breakpoint 2, __GI__exit (status=status@entry=0)

27 {

LEGEND: STACK | HEAP | CODE | DATA | RWX | RODATA

────────────[ REGISTERS / show-flags off / show-compact-regs off ]──────

*RAX 0x0

*RBX 0x7ffff7f7d108 (__exit_funcs_lock) ◂— 0x0

*RCX 0x0

*RDX 0x7ffff7f8b640 ◂— 0x7ffff7f8b640

*RDI 0x0

*RSI 0x0

*R8 0x7fffffffdae0 ◂— 0x100000000

*R9 0x7fffffffda6f ◂— 0x7ffff7ffda0800

*R10 0x7fffffffd9f0 —▸ 0x7ffff7ffe2c0 —▸ 0x555555554000 ◂— 0x10102464c457f

*R11 0x7fffffffda70 —▸ 0x7ffff7ffda08 (_rtld_global+2568) ◂— 0x0

R12 0x0

*R13 0x7ffff7f7b660 (__exit_funcs) ◂— 0x0

*R14 0x0

*R15 0x7ffff7f7d120 (initial) ◂— 0x0

*RBP 0x0

*RSP 0x7fffffffdb38 —▸ 0x7ffff7dde4e7 (__run_exit_handlers+423)

*RIP 0x7ffff7e74f20 (_exit) ◂— endbr64

─────────────────────[ DISASM / x86-64 / set emulate on ]────────────────

► 0x7ffff7e74f20 <_exit> endbr64

0x7ffff7e74f24 <_exit+4> mov rsi, qword ptr [rip + 0x105e25]

0x7ffff7e74f2b <_exit+11> mov edx, 0xe7

0x7ffff7e74f30 <_exit+16> jmp _exit+25 <_exit+25>

↓

0x7ffff7e74f39 <_exit+25> mov eax, edx

0x7ffff7e74f3b <_exit+27> syscall

0x7ffff7e74f3d <_exit+29> cmp rax, -0x1000

0x7ffff7e74f43 <_exit+35> jbe _exit+24 <_exit+24>

0x7ffff7e74f45 <_exit+37> neg eax

0x7ffff7e74f47 <_exit+39> mov dword ptr fs:[rsi], eax

0x7ffff7e74f4a <_exit+42> jmp _exit+24 <_exit+24>

──────────────────────────────[ SOURCE (CODE) ]──────────────────────────

22 #include <abort-instr.h>

23

24

25 void

26 _exit (int status)

► 27 {

28 while (1)

29 {

30 INLINE_SYSCALL (exit_group, 1, status);

31

32 #ifdef ABORT_INSTRUCTION

─────────────────────────────────[ STACK ]──────────────────────────────

02:0010│ 0x7fffffffdb48 —▸ 0x7ffff7e38e63 (free+115)

03:0018│ 0x7fffffffdb50 ◂— 0x0

04:0020│ 0x7fffffffdb58 ◂— 0x100000000

05:0028│ 0x7fffffffdb60 —▸ 0x7fffffffdcb8 —▸ 0x7fffffffe069

06:0030│ 0x7fffffffdb68 —▸ 0x7fffffffdcb8 —▸ 0x7fffffffe069

07:0038│ 0x7fffffffdb70 ◂— 0x1

──────────────────────────────[ BACKTRACE ]─────────────────────────────

► f 0 0x7ffff7e74f20 _exit

f 1 0x7ffff7dde4e7 __run_exit_handlers+423

f 2 0x7ffff7dde5b0 internal_addseverity

f 3 0x7ffff7dc6797 __libc_start_call_main+135

f 4 0x7ffff7dc684a __libc_start_main+138

f 5 0x555555555075 _start+37

─────────────────────────────────────────────────────────────────────────

pwndbg> bins

tcachebins

empty

fastbins

0x20: 0x0

0x30: 0x0

0x40: 0x0

0x50: 0x0

0x60: 0x0

0x70: 0x0

0x80: 0x0

unsortedbin

all: 0x555555559290 —▸ 0x7ffff7f7bb00 (main_arena+96) ◂— 0x555555559290

smallbins

empty

largebins

empty

pwndbg> heap

Allocated chunk | PREV_INUSE

Addr: 0x555555559000

Size: 0x291

Free chunk (unsortedbin) | PREV_INUSE

Addr: 0x555555559290

Size: 0x421

fd: 0x7ffff7f7bb00

bk: 0x7ffff7f7bb00

Allocated chunk

Addr: 0x5555555596b0

Size: 0x20

Top chunk | PREV_INUSE

Addr: 0x5555555596d0

Size: 0x20931

pwndbg>

Nice, our chunk ended up within the unsorted bin.

Arena

The arena generally acts as a state for the allocator and heap. This is the structure which contains the bins, as well as other important fields such as a mutex which serializes access on multithreaded processes.

Let’s now take a look at the definition of the arena within Glibc.

struct malloc_state {

/* Serialize access. */

__libc_lock_define (, mutex);

/* Flags (formerly in max_fast). */

int flags;

/* Fastbins */

mfastbinptr fastbinsY[NFASTBINS];

/* Base of the topmost chunk -- not otherwise kept in a bin */

mchunkptr top;

/* The remainder from the most recent split of a small request */

mchunkptr last_remainder;

/* Normal bins packed as described above */

mchunkptr bins[NBINS * 2 - 2];

/* Bitmap of bins */

unsigned int binmap[BINMAPSIZE];

/* Linked list */

struct malloc_state *next;

/* Linked list for free arenas. Access to this field is serialized

by free_list_lock in arena.c. */

struct malloc_state *next_free;

/* Number of threads attached to this arena. 0 if the arena is on

the free list. Access to this field is serialized by

free_list_lock in arena.c. */

INTERNAL_SIZE_T attached_threads;

/* Memory allocated from the system in this arena. */

INTERNAL_SIZE_T system_mem;

INTERNAL_SIZE_T max_system_mem;

};

I will now give a brief overview of each field within the structure, I recommend viewing the source of Glibc to gain a much more whole understanding of the concept.

The first field within the structure is the mutex which we know serializes access to the structure. The next field flags is a signed 32 bit integer which holds additional information about the state of the arena, the flags are as follows.

/*

FASTCHUNKS_BIT held in max_fast indicates that there are probably

some fastbin chunks. It is set true on entering a chunk into any

fastbin, and cleared only in malloc_consolidate.

The truth value is inverted so that have_fastchunks will be true

upon startup (since statics are zero-filled), simplifying

initialization checks.

*/

#define FASTCHUNKS_BIT (1U)

#define have_fastchunks(M) (((M)->flags & FASTCHUNKS_BIT) == 0)

#define clear_fastchunks(M) catomic_or(&(M)->flags, FASTCHUNKS_BIT)

#define set_fastchunks(M) catomic_and(&(M)->flags, ~FASTCHUNKS_BIT)

/*

NONCONTIGUOUS_BIT indicates that MORECORE does not return contiguous

regions. Otherwise, contiguity is exploited in merging together,

when possible, results from consecutive MORECORE calls.

The initial value comes from MORECORE_CONTIGUOUS, but is

changed dynamically if mmap is ever used as an sbrk substitute.

*/

#define NONCONTIGUOUS_BIT (2U)

#define contiguous(M) (((M)->flags & NONCONTIGUOUS_BIT) == 0)

#define noncontiguous(M) (((M)->flags & NONCONTIGUOUS_BIT) != 0)

#define set_noncontiguous(M) ((M)->flags |= NONCONTIGUOUS_BIT)

#define set_contiguous(M) ((M)->flags &= ~NONCONTIGUOUS_BIT)

/* ARENA_CORRUPTION_BIT is set if a memory corruption was detected on the

arena. Such an arena is no longer used to allocate chunks. Chunks

allocated in that arena before detecting corruption are not freed. */

#define ARENA_CORRUPTION_BIT (4U)

#define arena_is_corrupt(A) (((A)->flags & ARENA_CORRUPTION_BIT))

#define set_arena_corrupt(A) ((A)->flags |= ARENA_CORRUPTION_BIT)

The next field within the arena is a buffer of pointers to the fastbins of the arena. The top field will contain a pointer to the top chunk of the heap and last_remainder will also point to the last remainder chunk.

The field bins is interesting, as it contains the unsorted, small and large bins within a single field. We can see that the comment above refers to each of these bins as the normal bins.

Subsequently, the field binmap is another optimization which simply holds information on the state of each bin as to prevent manually searching through the large and small bins to find free chunks.

Directly after this, we have the next and next_free fields within the arena, which seems to contain pointers to other arenas. As we know, we can have multiple heaps within a single process and as a result, we have multiple heap states. These pointers serve as singly linked lists to the next arena. The next field is a cyclic singly linked list which will point to all of the non main arenas which belong to the main arena and the next_free field will point to a non circular list of free arenas, which are arenas with no threads attached.

The next fields are well documented within the source, attached_threads will hold the number of threads attached to the arena; and the system_mem and max_system_mem will contain the memory allocated and the largest amount of memory from the system within the arena.

Heap Info

The heap_info structure serves as a means of coordinating several heaps within the entirety of our process. This structure primarily holds important information of the heap, as well as acting as a singly linked list which points to the previous heap_info structure.

What follows is the definition of the structure within Glibc.

typedef struct _heap_info {

mstate ar_ptr; /* Arena for this heap. */

struct _heap_info *prev; /* Previous heap. */

size_t size; /* Current size in bytes. */

size_t mprotect_size; /* Size in bytes that has been mprotected

PROT_READ|PROT_WRITE. */

/* Make sure the following data is properly aligned, particularly

that sizeof (heap_info) + 2 * SIZE_SZ is a multiple of

MALLOC_ALIGNMENT. */

char pad[-6 * SIZE_SZ & MALLOC_ALIGN_MASK];

} heap_info;

Unlink Operation

As previously stated, the freed chunks which are cached into bins are held as linked lists. However, when retrieving such freed chunks and returning them from the allocator, we also need a mechanism to remove such chunks from their free list. This process is contained within the unlink_chunk function (previously a macro).

The function is defined as follows.

/* Take a chunk off a bin list. */

static void

unlink_chunk (mstate av, mchunkptr p)

{

if (chunksize (p) != prev_size (next_chunk (p)))

malloc_printerr ("corrupted size vs. prev_size");

mchunkptr fd = p->fd;

mchunkptr bk = p->bk;

if (__builtin_expect (fd->bk != p || bk->fd != p, 0))

malloc_printerr ("corrupted double-linked list");

fd->bk = bk;

bk->fd = fd;

if (!in_smallbin_range (chunksize_nomask (p)) && p->fd_nextsize != NULL)

{

if (p->fd_nextsize->bk_nextsize != p

|| p->bk_nextsize->fd_nextsize != p)

malloc_printerr ("corrupted double-linked list (not small)");

if (fd->fd_nextsize == NULL)

{

if (p->fd_nextsize == p)

fd->fd_nextsize = fd->bk_nextsize = fd;

else

{

fd->fd_nextsize = p->fd_nextsize;

fd->bk_nextsize = p->bk_nextsize;

p->fd_nextsize->bk_nextsize = fd;

p->bk_nextsize->fd_nextsize = fd;

}

}

else

{

p->fd_nextsize->bk_nextsize = p->bk_nextsize;

p->bk_nextsize->fd_nextsize = p->fd_nextsize;

}

}

}

Malloc Algorithm

Memory allocators typically follow a very specific and simple design and algorithm. However in the case of PTMalloc, it serves as the default userspace memory allocator for every application, meaning that it should provide a consistent balance across all factors.

Due to this, the design of PTMalloc is overtly complex and (in my opinion) inelegant. PTMalloc attempts to maintain maximum performance whilst also reducing fragmentation on the heap. It achieves this by reusing chunks which have been freed by the allocator or by consolidating chunks and attempting to reusing those.

The precise steps differ from Glibc version to version, in this post I will be covering the algorithm of version 2.31. The best reference for the exact steps of malloc is by referring to the source code. This post will attempt to construct a general outline of the process. There will most likely only be slight variations due to recent addition of new exploit mitigations in later versions.

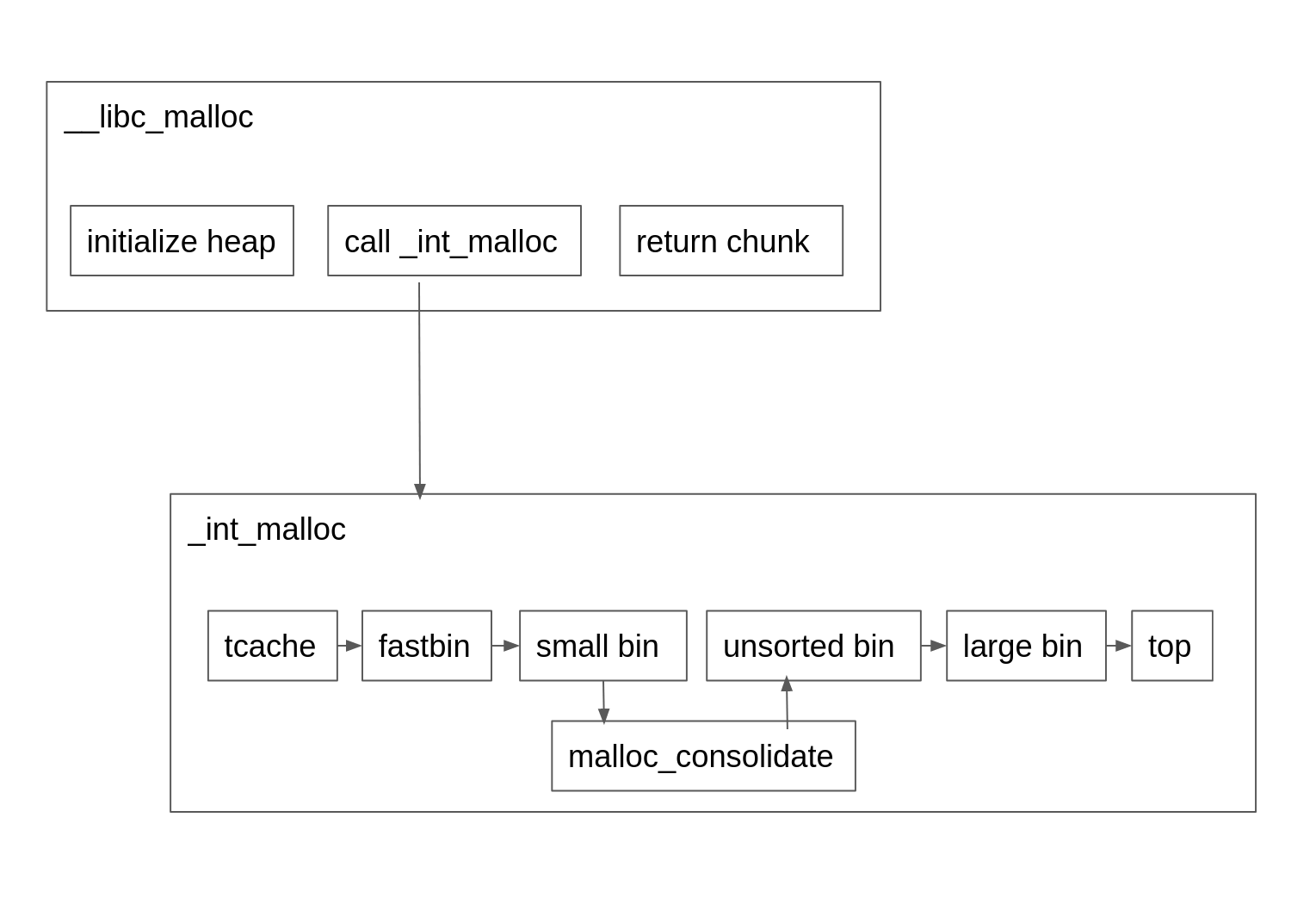

As we have previously stated, the allocator will attempt to use all the previously cached chunks within each of the bins as a means of optimizing allocation speeds and reducing fragmentation on the heap. What follows is the high-level abstracted order in which malloc will search for chunks within the bins. Keep in mind that there is a more convoluted algorithm which lies underneath this, which we will cover.

Tcache -> Fastbin -> Small Bin -> Unsorted Bin -> Large Bin -> Top Chunk / Mmap

Let’s now dive into a high level description of the exact steps in which malloc takes to allocate a chunk of memory. There are two functions which are responsible for the allocation of memory when we call malloc, these are __libc_malloc and _int_malloc.

__libc_malloc

https://elixir.bootlin.com/glibc/glibc-2.31/source/malloc/malloc.c#L3021

- Check if the heap has been initialized, if not then it will initialize.

- Then it will check if a malloc hook has been defined. If so, it will call it with a few debugging parameters.

- If the tcache has been enabled at compile time, it will convert the requested size of bytes into the apropriate tcache bin index and check for an entry. If there is a free chunk within the tcache which fits the description, it will return the chunk. Keep in mind that the tcache was introduced in Glibc 2.26, meaning that prior versions of PTMalloc won’t have this portion of code.

- Then, if glibc has been compiled to support only a single thread, then it will return a chunk returned from a call to _int_malloc on the main arena. However, by default this option is disabled so it will not execute this path. Instead, it will call arena_get, which locks the arena to allow for thread safe interactions between arenas which may potentially be shared among threads. It will then make a call to _int_malloc with the arena.

- If the result of _int_malloc and the arena pointer is not null, then it will attempt to find another arena to retry allocation.

- Finally, if the arena pointer is not null then it will unlock the mutex which synchronizes the arena, and returns the allocated chunk.

_int_malloc

https://elixir.bootlin.com/glibc/glibc-2.31/source/malloc/malloc.c#L3511

- Check if the requested size is within viable range to be allocated and convert it into an internal representation (adding SIZE_SZ / alignment / padding).

- Next, check the arena pointer which was passed as an argument, if the arena is null then it will fall back to sysmalloc to allocate a chunk from mmap.

- If the size is within the range of the fastbin, then it will check the contents of the corresponding fastbin for a free chunk. While its checking the contents of the fastbin, it will also perform a procedure known as tcache stashing. This term simply refers to the process of moving all the chunks from the fastbin into the tcache. The tcache stash operation is only performed under the condition that while the fast bin is not empty and the tcache is not full.

- Then if the request is within the size of the small bin (less than 512), it will then check the small bin for cached chunks. If the tcache is enabled, then the small bin search will also perform a tcache stash, the same operation as the fastbin.

- Else, if and only if the request is not within the range of the fast bin or the smalll bin, then we attempt to check if it is within the range of the large bin. If so, it will make a call to malloc_consolidate, which consolidates all of the fastbins with their adjacent free chunks and places them within the unsorted bin. Due to the fact that the next bins which will be searched for free chunks will be larger sized bins, consolidating the fastbin chunks increases the chances of finding a larger chunk. This also reduces fragmentation on the heap which is caused by the fastbins lack of consolidation. This option is taken if the chunk size is over 1024 bytes.

- Next, it will check and sort the entries within the unsorted bin. Please refer to the unsorted bin section of this post as reference of its internal operations.

- Then it will check the contents of the large bin. Remember, these bins consist of doubly linked lists which hold a logarithmically spaced range of free chunks.

- Finally, if no chunks can be found within all the bins, then the allocator will resort to either mmap’ing the chunk, or splitting one off the top chunk. This is only used as a last case scenario due to the fact that using previously allocated chunks as opposed to allocating new ones reduces fragmentation, and by product reduces heap usage. It also serves as a faster mechanism of allocation.

Keep in mind, it is not necessary to know the entire step-by-step process in which malloc takes to allocate chunks for us, just a general understanding of the allocator should do just fine. However I would still recommend that you read through the source just to familiarize yourself with it.

The following is a high level diagram which covers the general process of the allocation algorithm.

Free Algorithm

We have discussed the memory allocation algorithm and what purpose the caching bins serve in the process, but lets now take a look at how these chunks get there in the first place. Im sure you know but when we deallocate a chunk of dynamically allocated memory, there is an operation known as free which handles the deallocation process.

Here we will be discussing the step by step process in which the PTMalloc allocator takes to deallocate a chunk.

__libc_free

https://elixir.bootlin.com/glibc/glibc-2.31/source/malloc/malloc.c#L3085

- Check for a free hook, if present it will call the hook with a few debugging parameters.

- Then check if the pointer to our chunk is null, if so then it will simply return and do nothing. Calling free with a null pointer will have no effect.

- Next, check if the chunk has it’s IS_MMAP bit set, if so then simply deallocate the chunk with munmap and return.

- Else, if tcache has not yet been initialized, then initialize the tcache. Find the arena in which the chunk belongs to and pass the chunk and the arena to the internal _int_free mechanism, which will handle the primary caching and deallocation mechanism. After this, return.

_int_free

https://elixir.bootlin.com/glibc/glibc-2.31/source/malloc/malloc.c#L4153

- First, we perform a few security checks to ensure the validity of the chunk we want to free. It checks whether or not the pointer to the chunk is valid by checking to see if it is less than the end of the address space, as the allocator never wraps around. It then checks whether or not the chunk size is at least larger than MINSIZE or a multiple of MALLOC_ALIGNMENT. This ensures that the chunk passed to free is of valid size.

- If the tcache is present within the version of glibc, it will check to see whether or not the chunk is already within the tcache bin. It does this check to ensure that a double free vulnerability does not go unchecked. If a duplicate chunk is found, it will abort the process.

- However, if there is no duplicate within the tcache and the tcache bin for the chunk is not full, then insert the free chunk into the tcache.

- Else, if free cannot place the chunk within the tcache and the chunk size falls within the bounds of the fast bin, then insert.

- If none of the previous conditions are met, then we check if the chunk is has not been allocated via mmap. If so, then we will then attempt to place the chunk into the unsorted bin. The chunks within the unsorted bin are not placed into the regular bin until they have been given one chance in malloc.

- Else if the region of memory has been allocated via mmap, then deallocate the region via munmap and return.

Glibc Versions

Lets now take a look at the various changes the allocator has undergone over the years of its use within Glibc. I will omit the versions Glibc which do not have a significant impact on the core implementations of the allocator for the sake of brevity. We will only be listing Glibc versions 2.23 through 2.36.

I will only be covering the significant changes to the allocator and additional information which may be relevant to its development. Please consider this portion of the post as more of a reference as opposed to an explanation of these various additions.

What follows is a table which contains all the relevant security checks and mechanisms implemented through the development of the allocator, as well as a brief description of them. To see the full implementation of each of these checks, you can search for the error string within the source.

| Symbol | Check | Error | Version |

|---|---|---|---|

| _int_malloc | verify that the entry being removed from fastbin is within size range. | malloc(): memory corruption (fast) | 2.23+ |

| _int_malloc | check the size of the entries within the unsorted bin. verify that size is less than or equals 2 * SIZE_SZ and larger than av->system_mem. | malloc(): memory corruption | 2.23+ |

| unlink | verify that the fd and bk pointers of the chunk we want to remove from the free list dont point to itself. | corrupted double-linked list | 2.23+ |

| unlink | if the chunk is not within the small bin range, check if the fd_nextsize and bk_nextsize point to itself. | corrupted double-linked list (not small) | 2.23+ |

| unlink | check if the current chunks size is equal to the mchunk_prev_size field of the next chunk. this check essentially ensures that the size of the current chunk is valid according to the free list. | corrupted size vs. prev_size | 2.26+ |

| _int_malloc | when removing a chunk from the small bin, check to see if the chunk is actually part of the free list. it does this by ensuring that the victim->bk->fd is equal to victim. | malloc(): smallbin double linked list corrupted | 2.27+ |

| _int_malloc | when splitting a chunk in the unsorted bin and inserting the last remainder chunk back into the free list, check if next chunks bk pointer is equal to the current chunk. | malloc(): corrupted unsorted chunks | 2.27+ |

| _int_free | check to see if the cached chunk within the fastbin has a valid size | free(): invalid next size (fast) | 2.27+ |

| _int_free | checks that the chunk on the top of the fastbin is not the same as the chunk being inserted. this is used to prevent consecutive double frees from occurring. | double free or corruption (fasttop) | 2.27+ |

| _int_free | checks that the size of the fast bin chunk at the top is the same size as the chunk that we are inserting into the free list. | invalid fastbin entry (free) | 2.27+ |

| _int_free | verifies that the chunk we want to free is not a pointer to the top chunk. | double free or corruption (top) | 2.27+ |

| _int_free | check whether the chunk we want to free is within the boundaries of the heap arena. | double free or corruption (out) | 2.27+ |

| _int_free | check if the chunk we want to free is in use via the IS_INUSE bit. | double free or corruption (!prev) | 2.27+ |

| _int_free | when placing chunks into the unsorted bin, verify that the next chunk’s bk pointer is equal to the current chunk. this simply checks if the free list which the unsorted bin is maintaining has not been corrupted. | free(): corrupted unsorted chunks | 2.27+ |

Conclusion

That should be all as of now, I will try my best to continue updating this blog post as more renditions of Glibc are released but no guarantees. I have provided additional references below. I would highly recommend reading through the source code of the allocator as it provides great documentation on its implementation.

I will also be detailing various techniques which specifically target the metadata of PTMalloc to gain various exploit primitives, so stay tuned for that.

Thanks for reading and I hope that this has been helpful, good luck ヾ(’▽’).

References

https://ctf-wiki.mahaloz.re/

https://sourceware.org/glibc/wiki/MallocInternals/

https://heap-exploitation.dhavalkapil.com/

https://0x434b.dev/overview-of-glibc-heap-exploitation-techniques/

https://sploitfun.wordpress.com/2015/02/10/understanding-glibc-malloc/

https://elixir.bootlin.com/glibc/latest/source/

https://github.com/shellphish/how2heap/